
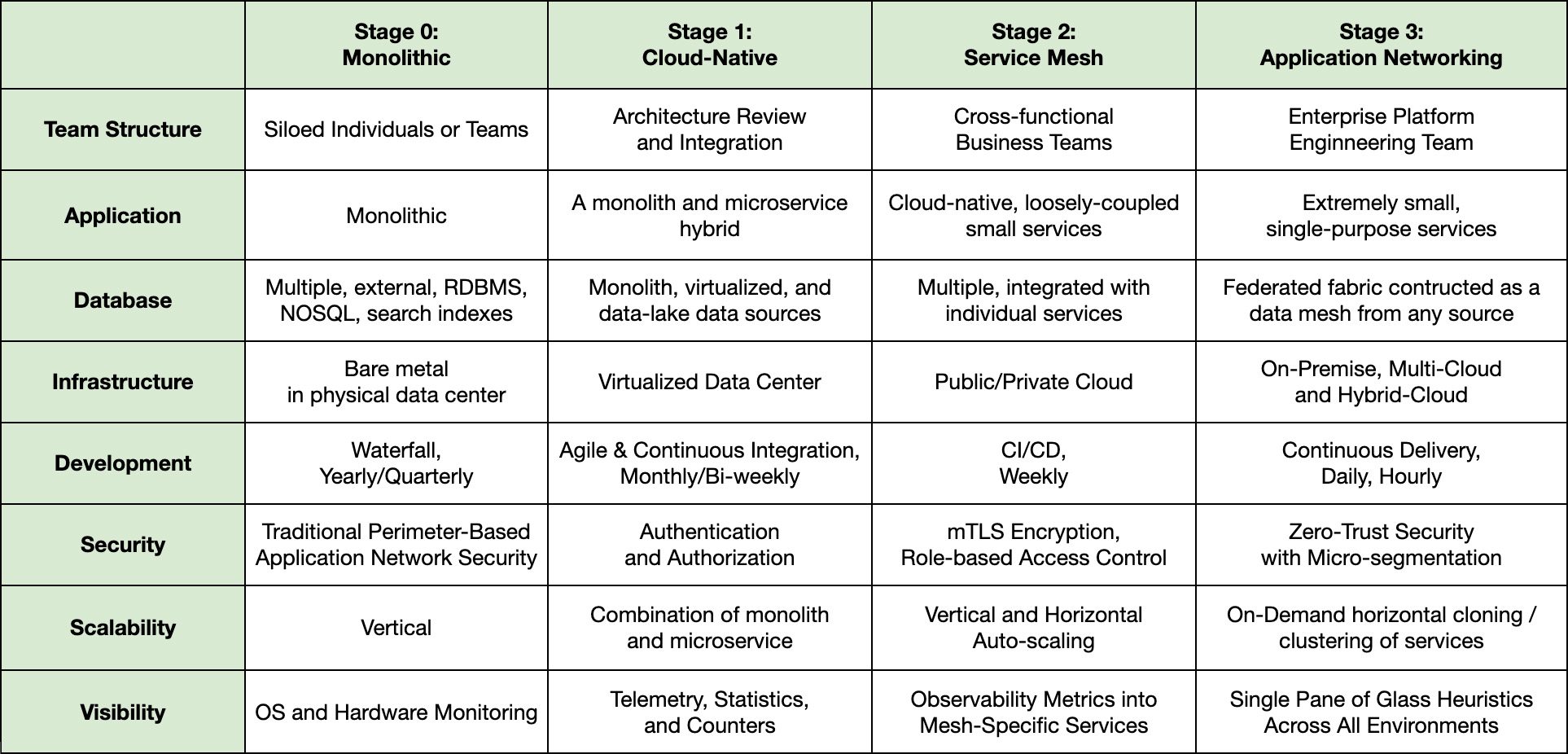

The Microservices Maturity Model

Understand the challenges of microservices adoption and where most teams get stuck on their journey to accelerate software delivery while ensuring security.

January 13, 2023

After working with mission-critical, defense and intelligence agencies within the U.S. government over the last seven years to deploy modern software applications in some of the most demanding environments worldwide, we have developed an application networking maturity model that helps software architects, DevOps and platform engineering teams drive microservices adoption.

This maturity model defines four different stages of adoption that organizations pass through on their journey to realize the promise of microservices to achieve greater application agility, flexibility, and scalability, while eliminating known trade-offs in complexity, security, and visibility.

Our customers have found this methodology helpful in understanding the operational benefits of each stage. This approach helps address the technical challenges of moving from one stage to the next as our customers accelerate software delivery and increase speed to market. It also ensures security across hybrid, multi-cloud and on-premises environments.

Stage 0: Pre-Cloud

A monolith architecture, usually coupled with a pre-cloud suite of tools, consists of several applications running on servers in an on-premises data center. It requires large development teams to integrate separate code changes. Of course, each of these changes requires detailed validation before new versions can be pushed out on a quarterly or annual basis.

Slow going

This process is slow and requires considerable effort for developers to update applications. Quality control and assurance are integral to ensure success. This has to happen before anything is deployed to production. Upgrades usually take many months and often require specific outage windows.

Lastly, data migrations and potential rollback strategies require significant planning in case something goes wrong. The lightning pace of business today means organizations can no longer afford to hide out in this stage. Those releasing applications sporadically cannot expect to remain competitive.

For example, many industries, such as healthcare and finance, still need to ensure customers can access the data stored in legacy systems, applications, and databases. However, at the same time, they must figure out how to build modern, user-friendly, customer experiences in new cloud and mobile applications.

Stage 1: Cloud-Native

Most organizations have moved past the monolith stage, introducing cloud-native technologies and microservices as the bridge between legacy and modern applications. They have begun to break portions of their monoliths into separate microservices to accelerate software development. Containers are adopted to package their software. At this point, these organizations have also implemented more agile software development practices. These could include Continuous Integration and Continuous Delivery (CI/CD) pipelines. CI/CD pipelines offer faster application delivery, but can often come at the expense of enterprise security and governance.

In this stage, individual teams build separate pieces of an application using their preferred programming languages, development frameworks, and logging tools. They often download different sets of open-source tools, libraries, and components. These are then stitched together, requiring expertise and knowledge of how solutions are composed at a granular level. The challenge is how best to connect these components to enterprise assets (databases, ERP tools, services, and core business layer functions), while managing one holistic application.

Enter the Modernization Pilot

The modernization process usually begins with a pilot application. This pilot may consist of 6-12 microservices running in a cloud-native environment, such as a managed Kubernetes cluster. Many organizations in the cloud-native stage have begun implementing Docker and Kubernetes, running upwards of 30-50 Kubernetes clusters. However, most do not have extensive enterprise-wide Kubernetes or cloud-native experience.

Legacy applications sit behind hardened firewalls, where organizations can manage them on servers or virtual machines. However, each microservice in a cloud-native environment is a separate networked application unto itself, with limited control, security, and visibility. In fact, the more a monolith is broken into separate microservices, the more surface area there is available to attack. This makes it much harder to meet existing compliance requirements with new cloud-native applications, while auditing, reporting, and proving that Kubernetes is secure.
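To make the growth in surface area concrete, here is a rough back-of-the-envelope sketch. It assumes a worst case in which every microservice may call every other one. The function name is hypothetical, and real topologies are usually sparser than this upper bound.

```python
def max_service_connections(service_count: int) -> int:
    """Upper bound on directed service-to-service call paths.

    Assumption: every service may call every other service, so each
    ordered pair (caller, callee) is a path that has to be secured.
    """
    if service_count < 0:
        raise ValueError("service_count must be non-negative")
    return service_count * (service_count - 1)


# A single monolith compared with the pilot sizes mentioned above.
for count in (1, 6, 12):
    paths = max_service_connections(count)
    print(f"{count} services -> up to {paths} call paths")
```

Under this assumption, doubling the number of services roughly quadruples the number of paths that need control, encryption, and auditing.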

IT teams are often left frustrated without being able to understand, control, and see what is happening in applications they are managing. As one can imagine, this frustration grows in line with the increased complexity of deploying decentralized software with microservices, APIs, and data sources across hybrid and multi-cloud environments.

Stage 2: Service Mesh

Organizations in this stage begin to evaluate the need to deploy a service mesh. Service meshes provide a de-coupled layer away from the application code. They are designed to control configuration policies, route internal east-west application traffic, and enforce security across an application networking stack. This stack includes connected microservices, APIs, and data sources.

Security is the number one driver for organizations to move from Stage 1 to Stage 2. Because each connected microservice, API, and data source runs in a different location, IT teams must secure any data or communications flowing between “Application A” and “Application B” in either direction.

This process is handled with mutual Transport Layer Security (mTLS). mTLS ensures that both sides of a connection authenticate each other and that all communications are encrypted when flowing between any applications, APIs, and microservices running within the enterprise. In most cases when considering service mesh, these communications are between applications that are running within a Kubernetes environment.
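In a service mesh, the proxies usually handle mTLS on behalf of the application, so teams rarely write this code themselves. Still, a minimal sketch using Python's standard `ssl` module shows what the "mutual" part means on the server side. The function name and its file-path parameters are hypothetical and are not part of any particular mesh product.

```python
import ssl
from typing import Optional


def build_mtls_server_context(
    cert_file: Optional[str] = None,
    key_file: Optional[str] = None,
    ca_file: Optional[str] = None,
) -> ssl.SSLContext:
    """Create a server-side TLS context that also demands a client certificate."""
    # Purpose.CLIENT_AUTH configures the context for the server end of a connection.
    context = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    # The "mutual" part: refuse any client that does not present a valid certificate.
    context.verify_mode = ssl.CERT_REQUIRED
    if cert_file and key_file:
        # The server's own identity (hypothetical paths supplied by the caller).
        context.load_cert_chain(certfile=cert_file, keyfile=key_file)
    if ca_file:
        # The certificate authority used to verify client certificates.
        context.load_verify_locations(cafile=ca_file)
    return context
```

Plain TLS would leave `verify_mode` at its server-side default, which does not ask the client for a certificate. Requiring one is what lets each service prove its identity to the other.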

mTLS, but now what?

Once IT teams prove their cloud-native applications are secure using a service mesh with a control plane and a fleet of data planes (proxy or proxy-less), they then require visibility and control. A service mesh allows them to gain that visibility into application performance and allocate the right resources to important applications. Then they can begin to comb through statistics allowing them to trace and troubleshoot problems as they arise.
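As a minimal sketch of the kind of check these statistics make possible, the function below compares observed availability against a target. The function name, the request counts, and the 99.9% target are illustrative assumptions, not figures from any real deployment.

```python
def meets_availability_target(
    total_requests: int, failed_requests: int, target: float
) -> bool:
    """Return True when the observed success ratio is at or above the target."""
    if total_requests == 0:
        # No traffic was observed, so the target cannot have been violated.
        return True
    availability = (total_requests - failed_requests) / total_requests
    return availability >= target


# Hypothetical numbers: 10,000 requests checked against a 99.9% target.
print(meets_availability_target(10_000, 5, 0.999))
print(meets_availability_target(10_000, 20, 0.999))
```

With 5 failures the observed availability is 99.95%, which meets the target. With 20 failures it drops to 99.8%, which does not.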

DevOps can now start to work with Application Development teams to see how their applications are running, ensure resiliency, and meet SLAs, SLOs, and other metrics. At this point, they are betting that mTLS encryption will be enough to meet security team requirements. They believe they are finally ready to roll out into production.

This is where most companies get stuck. These organizations realize that a service mesh proven in a dev environment is not enough. Put simply, the enterprise needs more than a service mesh to control complexity in a live production environment and manage different clouds, VMs, Kubernetes clusters, and exposed APIs.

Stage 3: Application Networking

In order to move from Stage 2 to Stage 3, DevOps and platform engineering teams now enter a world of exponentially greater complexity. Decentralized, microservices-based applications in a live, production environment focused on Day 2 operations are much harder to manage at scale.

Organizations at this stage have implemented a service-centric enterprise with many applications connected to APIs and managed data services. Some organizations may have a service mesh and follow modern CI/CD processes for deploying new application changes in cloud-native environments. This may include separate teams working on different parts of an application running on AWS, Azure, or Google Cloud.

However, once an application is ready to go into production, organizations need to allow users to access its various parts. IT teams want to integrate SSO, Microsoft Active Directory, or an independent IAM tool with their service mesh, Kubernetes, and applications. But most struggle to do so.

Identity Management Challenges

This is when identity management, user authentication, role-based access control, and user-tracking audits become barriers to wide-scale adoption. IT teams must grapple with managing an ever-growing suite of third-party, cloud-native middleware to connect legacy databases, APIs, microservices, and data sources across decentralized applications.

It’s inefficient to have separate AWS admins, Azure admins, database admins, and network admins configure the right settings for different users.

Additionally, CISOs and security teams need to know what controls are in place. They need to know who has access to which applications, and ensure that the applications are isolated in multi-tenant environments. In addition, they must also prove compliance with security industry best practices across public and private clouds.
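The question of who has access to which applications can be sketched as a simple role-based lookup. All user, role, and application names below are hypothetical. In practice this data would come from an identity provider or policy engine, not from hard-coded dictionaries.

```python
from typing import Dict, Set

# Hypothetical data: the roles each user holds.
USER_ROLES: Dict[str, Set[str]] = {
    "alice": {"platform-admin"},
    "bob": {"billing-dev"},
}

# Hypothetical data: the applications each role may reach.
ROLE_APPS: Dict[str, Set[str]] = {
    "platform-admin": {"billing-api", "orders-api"},
    "billing-dev": {"billing-api"},
}


def apps_for_user(user: str) -> Set[str]:
    """Union of all applications granted through the user's roles."""
    apps: Set[str] = set()
    for role in USER_ROLES.get(user, set()):
        apps |= ROLE_APPS.get(role, set())
    return apps


def users_with_access(app: str) -> Set[str]:
    """Audit view: every user who can reach the given application."""
    return {user for user in USER_ROLES if app in apps_for_user(user)}


print(sorted(users_with_access("billing-api")))
print(sorted(users_with_access("orders-api")))
```

Keeping one mapping like this, instead of separate per-cloud and per-database settings, is what makes the audit question answerable in a single place.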

Most organizations don’t have the internal experience to manage this level of complexity. Furthermore, IT teams that have implemented service mesh solutions often realize they can’t move their applications to production without a more comprehensive microservices solution. Failing that, they will require a large amount of outsourced services expertise.

Greymatter.io Helps Enterprises Move to Stage 3

These are the same challenges the world’s largest defense and intelligence agencies ran into. They’re also why Greymatter.io has spent the last seven years focusing its efforts on building the best and most enterprise-ready application networking platform. Greymatter exists to address the issues Stage 0, 1, and 2 organizations face when moving to Stage 3.

We built an enterprise application networking platform that combines service mesh, API management and infrastructure intelligence, helping organizations reduce complexity, ensure security, enforce compliance, and optimize performance across any environment.

We understand that application networking and service-centric enterprises extend beyond Kubernetes. Organizations still use monoliths, VMs, and bare metal. Our platform was built as a bridge to the future. Greymatter is built for enterprises that want the benefits of APIs, microservices, and service mesh now — in months, not years.

We can help, no matter the stage

So, if you’re at Stage 0 or 1, we can help. We can start by helping you lay out your reference implementation architecture in 30 days or less. Here, we will start outlining how to make sure everything integrates. This will help prevent you from hitting roadblocks when you attempt to move from Stage 1 to Stage 2.

If you’re stuck in the mud in Stage 2 or spinning your wheels reaching for Stage 3, we can help. Or if you are in Stage 3 and realize that the increased complexity of managing a decentralized environment is consuming your platform engineering resources, we can help there too. Our technologists can assess your application networking architecture. And we can implement a model that enables zero-trust security, mTLS authentication and end-to-end encryption in 90 days or less.

Contact us today to schedule your free consultation. First, we will help determine your microservices maturity level. Then we will build a reference implementation architecture to begin moving your organization up the microservices maturity model. Accelerate software delivery and increase speed to market, while ensuring security today, with Greymatter!